Data Processing

Displaying bounding boxes with YOLO is very easy. To actually do something with the detections, however, the data has to be processed further.

My Use Case

My use case is as follows: I want a first-aid drone equipped with a camera to recognize people and fly directly towards them. The drone should then bring relief supplies as close as possible to the person and lower them with a winch. It also has to pay attention to other objects, since these can be obstacles.

YOLO Box Coordinates

The bounding boxes are included in the result tensor. To make them easier to process, I convert them into Python lists:

for box in results[0].boxes.data.tolist():
    print(box)

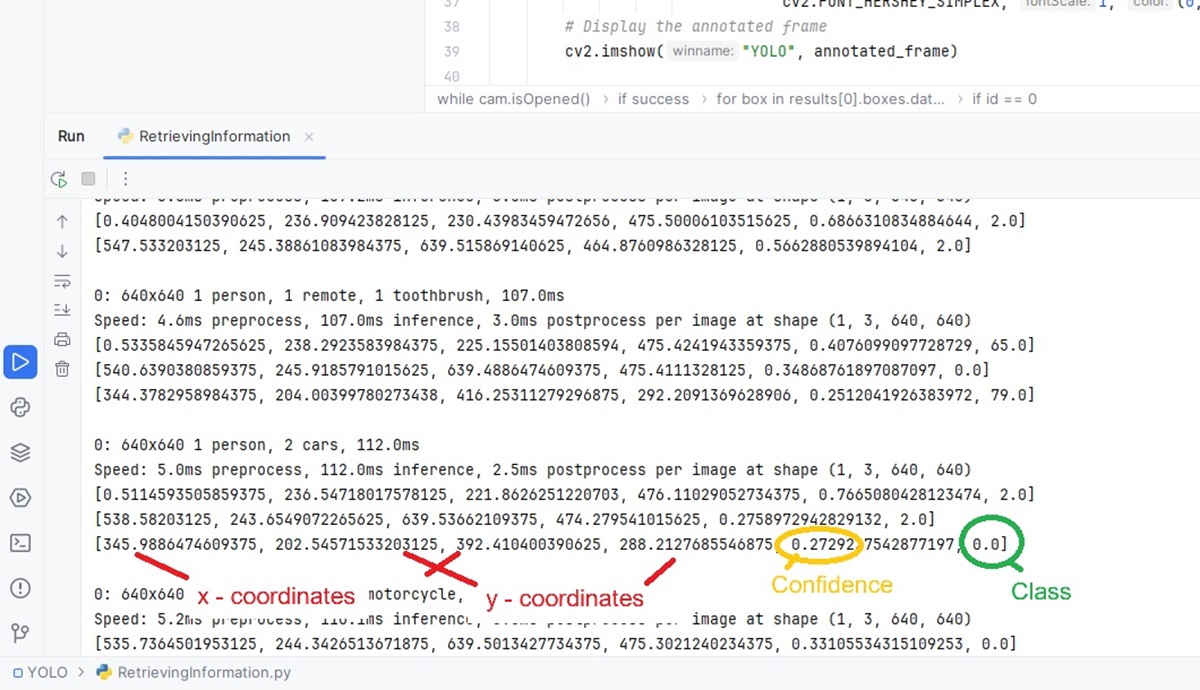

The results could look similar to this example:

0: 640x640 1 person, 2 cars, 112.0ms
Speed: 5.0ms preprocess, 112.0ms inference, 2.5ms postprocess per image at shape (1, 3, 640, 640)
[0.5114593505859375, 236.54718017578125, 221.8626251220703, 476.11029052734375, 0.7665080428123474, 2.0]
[538.58203125, 243.6549072265625, 639.53662109375, 474.279541015625, 0.2758972942829132, 2.0]
[345.9886474609375, 202.54571533203125, 392.410400390625, 288.2127685546875, 0.2729227542877197, 0.0]

Each box contains six values. The first four are the pixel coordinates of the top-left corner (xmin, ymin) and the bottom-right corner (xmax, ymax) of the box. Then comes the confidence score, and the last value is the ID of the recognized class, where 2 stands for “car” and 0 for “person”.
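To make the six values easier to work with, each row can be unpacked into named fields. The following is only a minimal sketch using the sample rows from above: `Detection`, `parse_box` and `CLASS_NAMES` are illustrative names that are not part of ultralytics, and the mapping contains only the two class IDs mentioned in the text.

```python
from typing import NamedTuple

# Illustrative subset of class names: only the two IDs mentioned in the text
CLASS_NAMES = {0: "person", 2: "car"}


class Detection(NamedTuple):
    xmin: float
    ymin: float
    xmax: float
    ymax: float
    confidence: float
    class_id: int


def parse_box(row):
    """Turn one six-value row into a Detection with an integer class ID."""
    xmin, ymin, xmax, ymax, conf, cls = row
    return Detection(xmin, ymin, xmax, ymax, conf, int(cls))


# Sample rows copied from the example output above
rows = [
    [0.5114593505859375, 236.54718017578125, 221.8626251220703,
     476.11029052734375, 0.7665080428123474, 2.0],
    [538.58203125, 243.6549072265625, 639.53662109375,
     474.279541015625, 0.2758972942829132, 2.0],
    [345.9886474609375, 202.54571533203125, 392.410400390625,
     288.2127685546875, 0.2729227542877197, 0.0],
]

detections = [parse_box(row) for row in rows]
for det in detections:
    name = CLASS_NAMES.get(det.class_id, "unknown")
    width = det.xmax - det.xmin
    print(f"{name}: confidence {det.confidence:.2f}, width {width:.0f} px")
```

In a real run, the model's own mapping (`results[0].names`) would be a better source for the class names than a hand-written dictionary.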

Test

The following test simulates the use case described above: a person (among other objects) is to be recognized. The position of the person's bounding box in the image is then used to determine the direction in which the drone should fly. If the person is far to the left in the image, the drone must fly to the left; if the person is far to the right, the drone must fly to the right.

Of course, the whole thing is just a simulation: instead of actually controlling a drone, the directional information is simply displayed in the image.
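The left/right decision described above can be isolated in a small function, which makes it easy to try out without a camera. This is a sketch under assumptions: the function name `direction_for_person` and the `"center"` return value are made up for illustration, and the default thresholds of 250 and 400 pixels are the ones used in the test program, which presumably suit a frame about 640 pixels wide.

```python
def direction_for_person(xmin, xmax, left_limit=250, right_limit=400):
    """Return "left", "right" or "center" for one person box.

    left_limit and right_limit are pixel thresholds (assumed values).
    """
    if xmax < left_limit:
        # The whole box lies in the left part of the image
        return "left"
    if xmin > right_limit:
        # The whole box lies in the right part of the image
        return "right"
    # Box overlaps the middle region: no course correction needed
    return "center"


# The person box from the example output above
person_box = [345.9886474609375, 202.54571533203125, 392.410400390625,
              288.2127685546875, 0.2729227542877197, 0.0]
xmin, _, xmax, _, _, _ = person_box
print(direction_for_person(xmin, xmax))  # prints "center"
```

Keeping the thresholds as parameters means they can be adapted if the camera delivers frames of a different width.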

Test Program

import cv2
from ultralytics import YOLO

# Load the YOLO model
model = YOLO("yolo11n_ncnn_model")

# Open the video stream
cam = cv2.VideoCapture(0)

# Loop through the video frames
while cam.isOpened():
    # Read a frame from the video
    success, frame = cam.read()

    if success:
        # Run detection on the frame (all classes, since other objects can be obstacles)
        results = model.predict(frame)

        # Visualize the results on the frame
        annotated_frame = results[0].plot()

        # Check all boxes
        for box in results[0].boxes.data.tolist():
            print(box)
            xmin, ymin, xmax, ymax, conf, class_id = box

            # If a person was detected (class 0), display directional information
            if class_id == 0:
                if xmax < 250:
                    cv2.putText(annotated_frame, "<---left", (400, 50),
                                cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)
                elif xmin > 400:
                    cv2.putText(annotated_frame, "right--->", (40, 50),
                                cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)

        # Display the annotated frame
        cv2.imshow("YOLO", annotated_frame)

        # Break the loop if 'q' is pressed
        if cv2.waitKey(1) & 0xFF == ord("q"):
            break
    else:
        # Break the loop if no frame could be read (e.g. end of the video)
        break

# Release the video capture object and close the display window
cam.release()
cv2.destroyAllWindows()
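When several people are in view, the loop above draws a hint for every detected person, so both arrows can appear at the same time. One possible refinement is to pick a single target first, for example the person with the highest confidence. The sketch below is only an assumption about how that could look: `best_person` and its `min_conf` parameter are hypothetical names, and the rows are the sample boxes from the example output.

```python
def best_person(boxes, person_class=0, min_conf=0.25):
    """Return the most confident person box, or None if there is none.

    Each box is a list [xmin, ymin, xmax, ymax, confidence, class_id].
    min_conf is an assumed cut-off, not a value from the original program.
    """
    persons = [b for b in boxes
               if int(b[5]) == person_class and b[4] >= min_conf]
    return max(persons, key=lambda b: b[4], default=None)


# Sample rows copied from the example output
boxes = [
    [0.5114593505859375, 236.54718017578125, 221.8626251220703,
     476.11029052734375, 0.7665080428123474, 2.0],
    [538.58203125, 243.6549072265625, 639.53662109375,
     474.279541015625, 0.2758972942829132, 2.0],
    [345.9886474609375, 202.54571533203125, 392.410400390625,
     288.2127685546875, 0.2729227542877197, 0.0],
]

target = best_person(boxes)
if target is None:
    print("no person detected")
else:
    print(f"target person at x = {target[0]:.0f}..{target[2]:.0f}")
```

The other boxes stay available in `boxes`, so they could still be evaluated as potential obstacles.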